Question: I have a two-disk RAID-1 array in mdadm, and I purchased two more disks. How can I grow the array to use the new disks?

Answer: This takes some strategy and time. In short, we install the new disks, create a new RAID-10 array with two missing members, copy the data over, and then move the old disks into the new array one at a time.

Assumptions

We will make a few assumptions:

- The disks are the same size

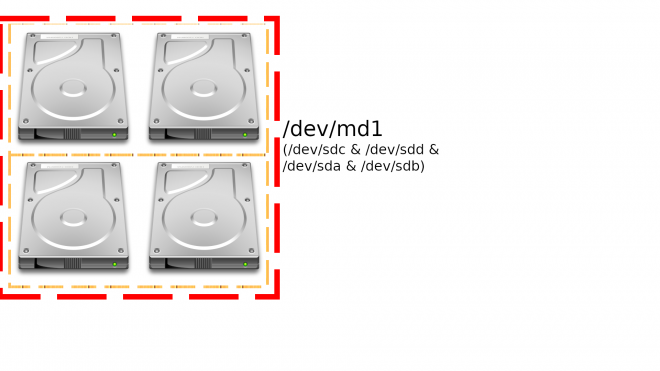

- The current RAID1 array is identified as /dev/md0, which also means you have mdadm installed
- The current disks are identified as /dev/sda and /dev/sdb
- The new disks are identified as /dev/sdc and /dev/sdd

If any of the above is incorrect, adjust the commands below accordingly.

Preparing

First, let’s make a backup. As with any operation that changes partition tables or touches your data en masse, you need to create a good, working backup.

Once you are confident your data is safe, physically install the two new hard drives. They should be detected as /dev/sdc and /dev/sdd. To check, you can run fdisk -l to list the drives and any partitions that may be on them.
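If you prefer a quick yes/no answer over reading the full fdisk listing, a small check like the following can help. It is only a sketch: check_disks is a made-up helper name, and the device names are the ones assumed earlier in this post.

```shell
#!/bin/sh
# Hypothetical helper: report whether each expected disk shows up as a block device.
check_disks() {
    for disk in "$@"; do
        if [ -b "$disk" ]; then
            echo "$disk: detected"
        else
            echo "$disk: not found"
        fi
    done
}

# Device names follow the assumptions listed earlier in this post.
check_disks /dev/sdc /dev/sdd
```

If a disk is reported as not found, check the cabling and the kernel log before going any further.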

Partitioning

You will need to create partitions as Linux RAID. You can use fdisk or parted for this step.

It is important to match the partition sizes with the current drives, especially for the /boot partition. The /boot partition will be a RAID 1, while the rest of your data can be a RAID 10.
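One way to get matching partition sizes is to dump the layout of an existing disk with sfdisk and replay it onto a new one, instead of typing the sizes by hand. The sketch below assumes /dev/sda is a current disk and /dev/sdc and /dev/sdd are the new, empty ones. The clone_layout helper and the DRY_RUN variable are made up for this example; with the default DRY_RUN=1 it only prints what it would do.

```shell
#!/bin/sh
# Hypothetical helper: copy the partition layout of one disk onto another.
# DRY_RUN is an assumption of this sketch; it defaults to 1 so nothing is written.
DRY_RUN="${DRY_RUN:-1}"

clone_layout() {
    src="$1"   # existing disk, e.g. /dev/sda
    dst="$2"   # new, empty disk, e.g. /dev/sdc
    if [ "$DRY_RUN" = "1" ]; then
        # Only show the command that would be executed.
        echo "sfdisk -d $src | sfdisk $dst"
    else
        # sfdisk -d dumps the table in a format sfdisk can read back on stdin.
        sfdisk -d "$src" | sfdisk "$dst"
    fi
}

clone_layout /dev/sda /dev/sdc
clone_layout /dev/sda /dev/sdd
```

Double-check the source and destination before running it with DRY_RUN=0, since the destination's partition table is overwritten.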

Array Construction

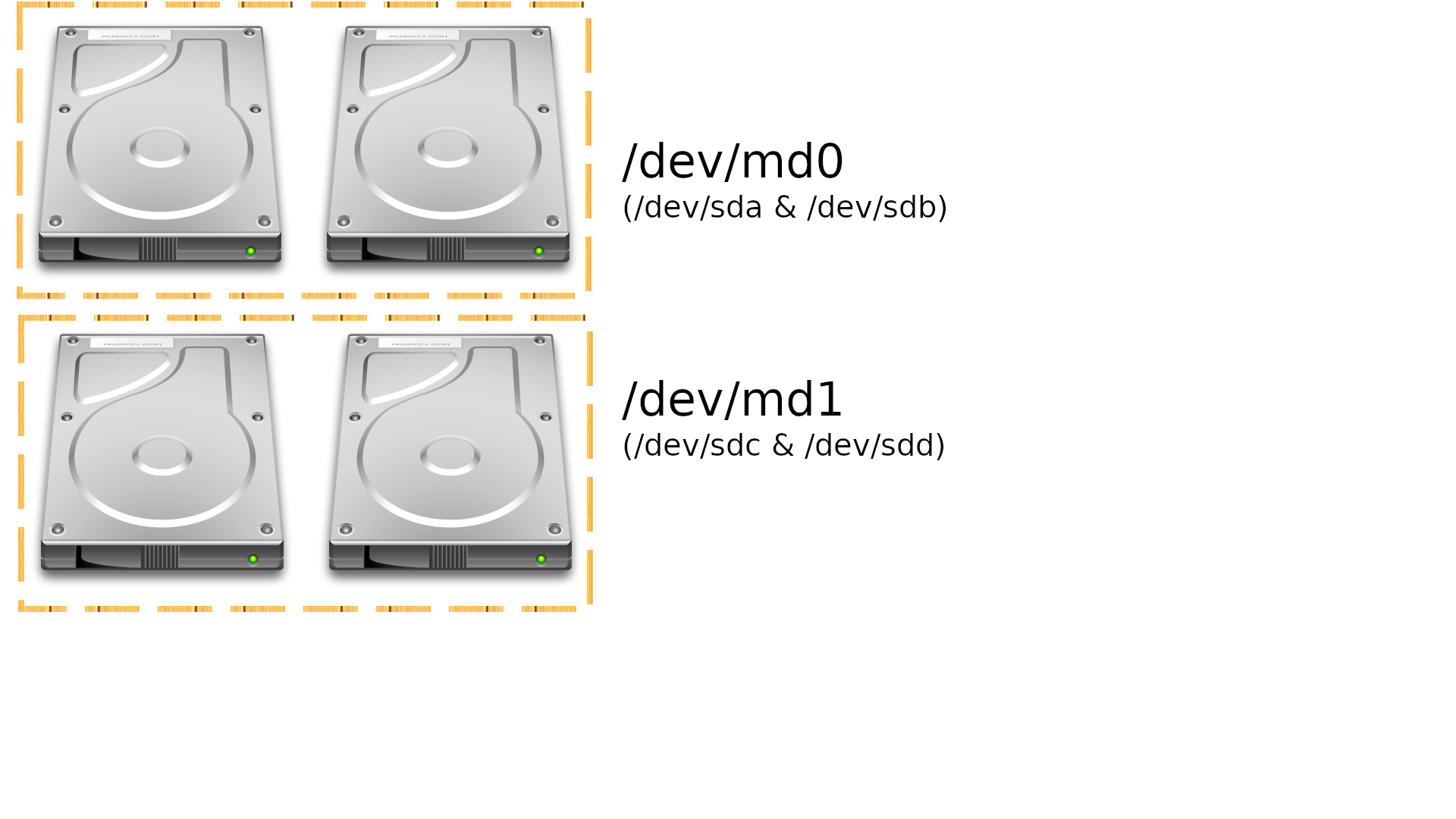

Next, we are going to create a new RAID10 array with our two new drives, and two “missing” drives. RAID10 typically works with 4 drives minimum, but can survive with two missing drives (if they’re the right missing drives).

The next command is:

mdadm -v --create /dev/md1 --level=raid10 --raid-devices=4 /dev/sdc1 missing /dev/sdd1 missing

The parameters for this command:

- -v for verbose output
- --create to create a new mdadm device (/dev/md1)
- --level=raid10 to set a striping mirror array (aka RAID 10)
- --raid-devices=4 to expect 4 drives for a fully-populated RAID array
- /dev/sdc1 for device A
- missing for device B
- /dev/sdd1 for device C
- missing for device D

To check the status of an array, you can use cat /proc/mdstat. It should complain that two devices are missing, but that the array is operational.
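If you want a script to confirm the "degraded but running" state, the member map at the end of the array's status line can be checked: it looks like [UUUU] when all members are present, and shows an underscore for each missing slot. The following is a rough sketch, not an official mdadm tool; md_is_degraded is a made-up name, and it takes an optional file argument so it can be pointed at a saved copy of /proc/mdstat.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the named md array has missing members.
# Reads mdstat-formatted text from the file given as $2 (default: /proc/mdstat).
md_is_degraded() {
    array="$1"                      # e.g. md1 (without /dev/)
    statfile="${2:-/proc/mdstat}"
    awk -v name="$array" '
        $1 == name && $2 == ":"          { in_array = 1; next }  # header line of our array
        in_array && NF == 0              { exit }                # blank line ends the stanza
        in_array && /\[[U_]*_[U_]*\]/    { found = 1 }           # "_" marks a missing slot
        END { exit(found ? 0 : 1) }
    ' "$statfile"
}

# Usage: print a short verdict for the new array, if mdstat is available.
if [ -r /proc/mdstat ]; then
    if md_is_degraded md1; then
        echo "md1 is degraded (expected while two devices are missing)"
    else
        echo "md1 is not reported as degraded"
    fi
fi
```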

Copy your old data to the new array

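Create a filesystem on the new array if it does not have one yet (for example, mkfs.ext4 /dev/md1 if you use ext4). Then create a new directory that you can use to mount the array, and begin copying your “live” data over.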

mkdir /mnt/raid

mount /dev/md1 /mnt/raid

rsync -avP /path/to/data /mnt/raid/
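Before touching the old array, it is worth confirming that the copy is complete. A simple approach is a recursive diff of the two trees. This is a sketch only: trees_match is a made-up helper, and it compares file contents but not ownership or permissions. Note that because the rsync source above has no trailing slash, the data ends up in /mnt/raid/data.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if two directory trees have identical contents.
trees_match() {
    # diff -r recurses into subdirectories, -q only reports whether files differ.
    diff -rq "$1" "$2" >/dev/null 2>&1
}

# Paths follow the rsync command above; adjust them to your setup.
src=/path/to/data
dst=/mnt/raid/data
if [ -d "$src" ] && [ -d "$dst" ]; then
    if trees_match "$src" "$dst"; then
        echo "copy verified"
    else
        echo "differences found, re-run rsync before continuing"
    fi
fi
```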

Start failing the old RAID

Once your data is copied over, we can start failing your old array. In a RAID1 configuration, you can lose any single drive and keep your data. The new RAID10 array, with two devices missing, currently behaves like a RAID0: you can’t afford to lose ANY drive and keep your data.

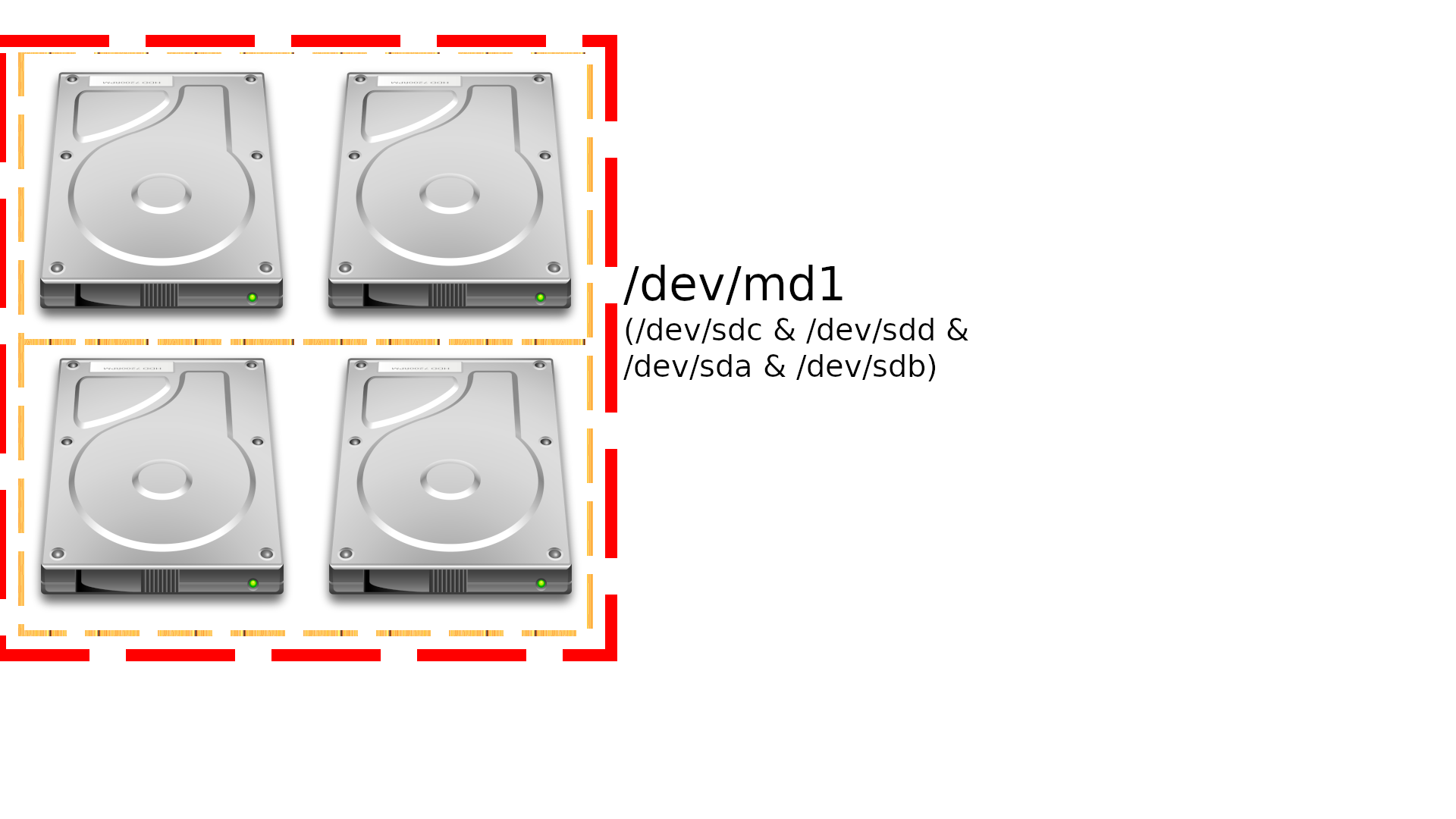

Once you are ready, run mdadm /dev/md0 --fail /dev/sda1 --remove /dev/sda1. Assuming that the partition table is set up exactly like the new drives, you can then add it to the new array with mdadm /dev/md1 --add /dev/sda1.

You will need to repeat the above commands for any other Linux RAID partitions you need to move over (e.g. /boot).

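I do not recommend stressing the system while it’s syncing the drives. To watch the status, you can run watch cat /proc/mdstat (on Debian, watch is part of the procps package, if it’s not installed). Once it has fully added the first hard drive, you can repeat the above two commands with the second drive. mdadm may refuse to fail the last remaining member of /dev/md0; in that case, unmount and stop the old array first with mdadm --stop /dev/md0, then add the partition to the new array.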
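The fail, remove, add, and wait steps for one partition can be wrapped in a small script. This is a sketch under the device names assumed in this post; run, move_member, and DRY_RUN are made up for the example, and with the default DRY_RUN=1 the commands are only printed. It uses mdadm --wait, which blocks until any resync or recovery on the given array has finished.

```shell
#!/bin/sh
# Hypothetical helpers for moving one partition from the old array to the new one.
# DRY_RUN is an assumption of this sketch; it defaults to 1 so nothing is changed.
DRY_RUN="${DRY_RUN:-1}"

run() {
    # Print the command in dry-run mode, execute it otherwise.
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

move_member() {
    old="$1"    # old array, e.g. /dev/md0
    new="$2"    # new array, e.g. /dev/md1
    part="$3"   # partition to move, e.g. /dev/sda1
    run mdadm "$old" --fail "$part" --remove "$part" || return 1
    run mdadm "$new" --add "$part" || return 1
    # --wait also exits non-zero when there was nothing to wait for,
    # so its status is deliberately ignored here.
    run mdadm --wait "$new" || true
}

move_member /dev/md0 /dev/md1 /dev/sda1
```

Run it for the first drive, confirm in /proc/mdstat that the recovery has finished, and only then move on to the second drive.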

Removing the old array

You’ll notice if you attempt to restart the system that Linux will complain about /dev/md0 not being accessible. We need to tell Linux that this array is dead, gone, shot, buried, etc. To fully remove the old array, use mdadm --stop /dev/md0. The old array should not be reporting errors now, and you can continue with your day.

Bootloader Considerations

Next, you’ll need to check that your bootloader can still access your new array (if applicable). On Debian with Grub, it is simple enough to run dpkg-reconfigure grub-pc, and follow the prompts.

If you are getting errors when trying to install Grub again, verify that the array holding your /boot partition has finished syncing. If not, errors are known to happen. Try again.

As of the writing of this post, Grub does not support RAID10 /boot partitions. This means you’ll need to create a partition as RAID 1 across your drives just for the /boot partition. (You did heed my warning above, right?)

The /boot partition does not need to be big. Out of all the servers I have built, 100 MB has been more than enough. Please remember to include this in your planning!

Note: I posted this on Super User many years ago. It has many views, so I figured I’d do a post about it. I tested this when restoring my server many years ago.